Spam Filter using Logistic Regression

from nltk.tokenize import word_tokenize

from nltk.corpus import stopwords

from nltk.stem import PorterStemmer

import matplotlib.pyplot as plt

from wordcloud import WordCloud

from math import log, sqrt

import pandas as pd

import numpy as np

import re

from sklearn.model_selection import train_test_split

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.metrics import accuracy_score,confusion_matrix,classification_report

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

import os

import nltk

nltk.download('punkt')

[nltk_data] Downloading package punkt to

[nltk_data] C:\Users\I330087\AppData\Roaming\nltk_data...

[nltk_data] Unzipping tokenizers\punkt.zip.

True

# Dataset can be downloaded from https://www.kaggle.com/uciml/sms-spam-collection-dataset/data

messages = pd.read_csv("Dataset/spam.csv",encoding="latin-1")

messages.head()

| v1 | v2 | Unnamed: 2 | Unnamed: 3 | Unnamed: 4 | |

|---|---|---|---|---|---|

| 0 | ham | Go until jurong point, crazy.. Available only ... | NaN | NaN | NaN |

| 1 | ham | Ok lar... Joking wif u oni... | NaN | NaN | NaN |

| 2 | spam | Free entry in 2 a wkly comp to win FA Cup fina... | NaN | NaN | NaN |

| 3 | ham | U dun say so early hor... U c already then say... | NaN | NaN | NaN |

| 4 | ham | Nah I don't think he goes to usf, he lives aro... | NaN | NaN | NaN |

messages.drop(['Unnamed: 2','Unnamed: 3','Unnamed: 4'],axis = 1,inplace=True)

messages.head()

| v1 | v2 | |

|---|---|---|

| 0 | ham | Go until jurong point, crazy.. Available only ... |

| 1 | ham | Ok lar... Joking wif u oni... |

| 2 | spam | Free entry in 2 a wkly comp to win FA Cup fina... |

| 3 | ham | U dun say so early hor... U c already then say... |

| 4 | ham | Nah I don't think he goes to usf, he lives aro... |

messages = messages.rename(columns={"v1":"label","v2":"text"})

messages.head()

| label | text | |

|---|---|---|

| 0 | ham | Go until jurong point, crazy.. Available only ... |

| 1 | ham | Ok lar... Joking wif u oni... |

| 2 | spam | Free entry in 2 a wkly comp to win FA Cup fina... |

| 3 | ham | U dun say so early hor... U c already then say... |

| 4 | ham | Nah I don't think he goes to usf, he lives aro... |

messages['label'] = messages['label'].map({'ham':0,'spam':1})

messages.head()

| label | text | |

|---|---|---|

| 0 | 0 | Go until jurong point, crazy.. Available only ... |

| 1 | 0 | Ok lar... Joking wif u oni... |

| 2 | 1 | Free entry in 2 a wkly comp to win FA Cup fina... |

| 3 | 0 | U dun say so early hor... U c already then say... |

| 4 | 0 | Nah I don't think he goes to usf, he lives aro... |

messages['label'].value_counts()

0 4825

1 747

Name: label, dtype: int64

X_train,X_test,y_train,y_test = train_test_split(messages["text"],messages["label"], test_size = 0.2, random_state = 10)

v = CountVectorizer()

v.fit(X_train)

CountVectorizer(analyzer='word', binary=False, decode_error='strict',

dtype=<class 'numpy.int64'>, encoding='utf-8', input='content',

lowercase=True, max_df=1.0, max_features=None, min_df=1,

ngram_range=(1, 1), preprocessor=None, stop_words=None,

strip_accents=None, token_pattern='(?u)\\b\\w\\w+\\b',

tokenizer=None, vocabulary=None)

train_df = v.transform(X_train)

test_df = v.transform(X_test)

hamwords = ''

spamwords = ''

hamw = messages[messages['label']==0]['text']

spamw = messages[messages['label']==1]['text']

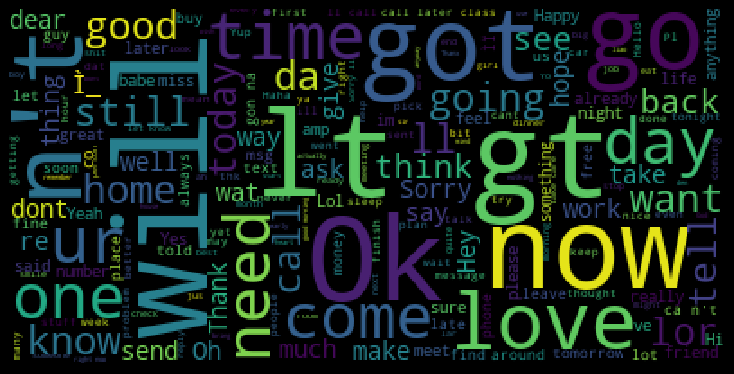

for row in hamw:

words = word_tokenize(row)

#print(word)

for x in words:

hamwords += x + ' '

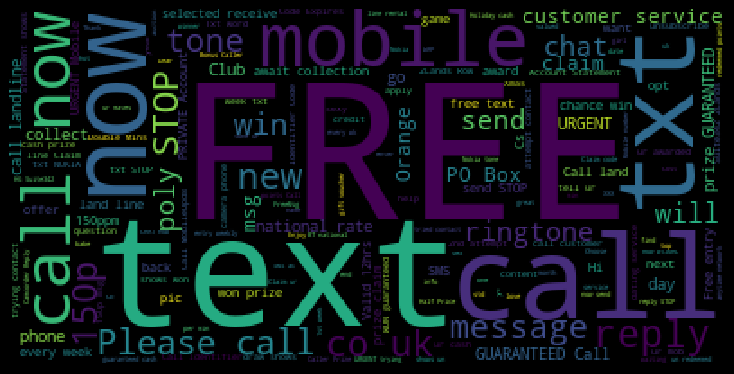

for row in spamw:

words = word_tokenize(row)

#print(word)

for x in words:

spamwords += x + ' '

wc_spam = WordCloud().generate(spamwords)

wc_ham = WordCloud().generate(hamwords)

#Spam Word cloud

plt.figure( figsize=(10,8), facecolor='k')

plt.imshow(wc_spam)

plt.axis("off")

plt.tight_layout(pad=0)

plt.show()

#Ham word cloud

plt.figure( figsize=(10,8), facecolor='k')

plt.imshow(wc_ham)

plt.axis("off")

plt.tight_layout(pad=0)

plt.show()

type(train_df)

scipy.sparse.csr.csr_matrix

print(train_df.shape, test_df.shape)

(4457, 7757) (1115, 7757)

from sklearn.linear_model import LogisticRegression

model = LogisticRegression()

model.fit(train_df,y_train)

C:\Users\I330087\AppData\Local\Continuum\anaconda3\lib\site-packages\sklearn\linear_model\logistic.py:432: FutureWarning: Default solver will be changed to 'lbfgs' in 0.22. Specify a solver to silence this warning.

FutureWarning)

LogisticRegression(C=1.0, class_weight=None, dual=False, fit_intercept=True,

intercept_scaling=1, l1_ratio=None, max_iter=100,

multi_class='warn', n_jobs=None, penalty='l2',

random_state=None, solver='warn', tol=0.0001, verbose=0,

warm_start=False)

predictions= model.predict(train_df)

accuracy_score(y_train,predictions)

0.99798070450976

predictions= model.predict(test_df)

accuracy_score(y_test,predictions)

0.9802690582959641